The DRL Framework as a Standards Framework for People Analytics and AI Enablement Transformation

The DRL framework transforms People Analytics AI enablement from an ambiguous aspiration ("we need better data quality") into a standardised programme with clear specifications, measurable milestones, and validated outcomes. It provides the architectural standards organisations need to systematically build Predictive People Analytics and AI-ready people data infrastructure rather than hoping existing approaches will eventually support AI effectiveness.

By serving five key stakeholder communities with equal relevance (business organisations, academics, developers, policy makers, and vendors), the DRL framework creates the ecosystem-wide alignment necessary for responsible and effective People Analytics and AI deployment at scale.

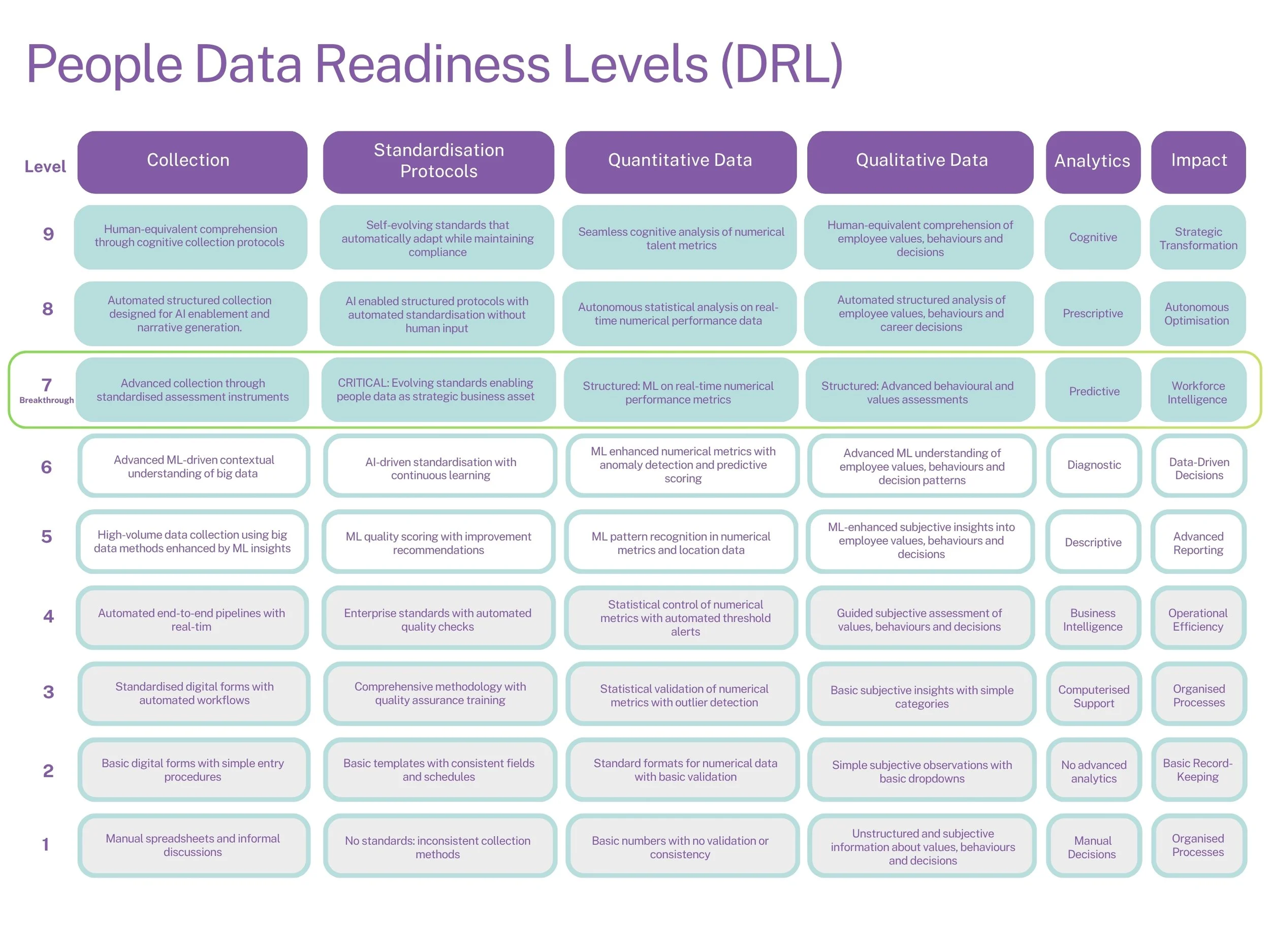

The Data Readiness Levels (DRL) framework functions as a maturity and standards model that provides organisations with:

1. Diagnostic Standardisation

Common assessment criteria across the ten root conditions

Benchmarking capability to identify current state (DRL 5-6 for most organisations)

Gap analysis structure showing distance from Predictive People Analytics and AI-ready state (DRL 7)

Objective measurement replacing subjective "data quality" assessments
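As an illustration of the gap analysis described above, the following minimal Python sketch computes each root condition's distance from DRL 7. It rests on assumptions that are not part of the framework text: that each condition can be assigned an integer DRL, and that the labels `root_condition_1` to `root_condition_10` stand in for the actual condition names. The example levels are invented for demonstration.

```python
from dataclasses import dataclass

TARGET_DRL = 7  # the Predictive People Analytics and AI-ready level named in the text


@dataclass(frozen=True)
class ConditionScore:
    condition: str  # placeholder label, not a name taken from the framework
    level: int      # assessed DRL for this condition (assumed integer scale)


def gap_analysis(scores, target=TARGET_DRL):
    """Map each condition below target to its distance from the target level."""
    return {s.condition: target - s.level for s in scores if s.level < target}


# Illustrative baseline only; these levels are made up for the example.
example_levels = [5, 6, 7, 5, 6, 6, 7, 5, 6, 6]
scores = [
    ConditionScore(f"root_condition_{i}", level)
    for i, level in enumerate(example_levels, start=1)
]
gaps = gap_analysis(scores)
for condition, distance in gaps.items():
    print(f"{condition}: {distance} level(s) below DRL {TARGET_DRL}")
```

Conditions already at DRL 7 are omitted from the result, so the output doubles as a remediation list.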

2. Architectural Standards

The framework defines specific structural requirements at each level:

DRL 5-6 Standard: Data as technology by-product

System-generated logs and transactions

Reactive quality management

Analytics-ready ≠ AI-ready

DRL 7 Standard: Data as intentional product

Data-as-a-Product principles implemented

Proactive quality management (TDQM)

Predictive People Analytics and AI-consumption design criteria met across ten root conditions

This creates a clear specification for what "Predictive People Analytics and AI-ready people data architecture" actually means.

3. Implementation Roadmap Standards

The DRL framework provides a sequenced transformation pathway:

Phase 1: Assessment Against Standards

Audit current data collection against ten root conditions

Map existing practices to DRL levels

Identify which conditions block progression to DRL 7

Phase 2: DRL 7 Initiative Design

Using the framework's standards for each root condition:

Governance standards → Establish Data-as-a-Product Manager (DPM) roles with defined accountabilities

Collection standards → Redesign data capture to meet Predictive People Analytics and AI-consumption requirements

Quality standards → Implement Total Data Quality Management (TDQM) methodology systematically

Integration standards → Ensure interoperability across people data products

Phase 3: Validated Progression

Measure improvements against DRL criteria

Validate readiness for Predictive People Analytics and AI deployment

Demonstrate compliance with DRL 7 standards before Predictive People Analytics and AI investment
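The validation step in Phase 3 can be pictured as a simple gate that passes only when every root condition has reached DRL 7. The sketch below is one possible reading, not a prescribed implementation: the function name `readiness_gate`, the labels `c01` to `c10`, and the integer scoring are all hypothetical.

```python
TARGET_DRL = 7
EXPECTED_CONDITIONS = 10  # the text refers to ten root conditions


def readiness_gate(levels, target=TARGET_DRL):
    """Return (ready, blockers) for a mapping of condition label -> assessed DRL."""
    if len(levels) != EXPECTED_CONDITIONS:
        raise ValueError(
            f"expected {EXPECTED_CONDITIONS} conditions, got {len(levels)}"
        )
    # Any condition below the target blocks deployment (assumed rule).
    blockers = sorted(name for name, level in levels.items() if level < target)
    return (not blockers, blockers)


# Illustrative data: nine conditions at DRL 7, one still at DRL 6.
assessed = {f"c{i:02d}": 7 for i in range(1, 11)}
assessed["c04"] = 6
ready, blockers = readiness_gate(assessed)
print(ready, blockers)
```

Returning the list of blockers alongside the pass/fail flag keeps the gate actionable: a failed check points directly at the conditions that still need remediation.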

4. Stakeholder Alignment Standards

The framework provides common language across five key stakeholder communities:

Business Organisations

Executive leadership → Investment requirements and returns at each DRL level

HR/People functions → Data ownership responsibilities and Data-as-a-Product accountability

IT/Technology teams → Infrastructure requirements supporting DRL 7 progression

Analytics teams → Data fitness criteria for predictive model deployment

Benchmarking capability → Compare organisational maturity against industry standards

Academics

Research standardisation → Comparable studies using consistent maturity definitions

Theoretical validation → Empirical testing of DRL progression hypotheses

Pedagogy framework → Teaching People Analytics using structured capability levels

Knowledge advancement → Collaborative exploration of DRL 8-9 capabilities

Evidence base → Longitudinal studies tracking AI effectiveness correlations

Developers

System design specifications → Technical requirements for DRL 7 architecture

API standards → Data interfaces designed for AI consumption

Quality assurance criteria → Automated validation against ten root conditions

Integration patterns → Standardised approaches for connecting Data Products

Tool validation → Benchmarking whether development tools enable DRL advancement

Policy Makers

Regulatory standards baseline → DRL 7 as minimum requirement for AI deployment in high-stakes decisions

Compliance frameworks → Auditable criteria for responsible AI deployment

Industry guidance → Evidence-based recommendations for AI readiness investment

Risk mitigation policy → Standards preventing premature AI deployment

Cross-jurisdiction alignment → Common framework enabling international policy coordination

Vendors

Procurement requirements → Clear specifications for systems supporting DRL 7 capabilities

Product roadmaps → Technical requirements preventing DRL 5-6 limitations

Certification standards → DRL 7 compliance validation for platforms

Competitive differentiation → Demonstrable support for Predictive People Analytics and AI-ready architecture

Customer alignment → Shared language for capability discussions

5. Quality Assurance Standards

DRL 7 establishes verifiable quality criteria:

Each of the ten root conditions has a measurable standard

Progression requires demonstrated capability, not claimed maturity

Third-party assessment possible using standardised criteria

Prevents "checkbox compliance" through structural requirements

6. Risk Management Standards

The framework provides investment protection:

Pre-investment validation → Do not deploy AI until DRL 7 standards are met

Failure prevention → Systematic addressing of root conditions before Predictive People Analytics and AI deployment

Resource allocation → Focus investment on structural readiness, not symptom treatment

ROI protection → Confirm before procurement that Predictive People Analytics and AI deployment will function

Practical Application: The DRL 7 Initiative Framework

Standard Components Required

Organisations using DRL as a standards framework for their initiative must:

Establish baseline against all ten root conditions using DRL criteria

Design remediation programmes for each condition below standard

Implement Data-as-a-Product governance meeting DRL 7 specifications

Deploy Total Data Quality Management (TDQM) methodology systematically across people data

Validate achievement against DRL 7 criteria before Predictive People Analytics and AI deployment

Standard Governance Structure

Data-as-a-Product Council → Strategic oversight

Data-as-a-Product Managers (DPMs) → Operational accountability

Quality Assurance Function → Standards compliance verification

Multi-Stakeholder Forum → Cross-functional and ecosystem alignment

Standard Success Metrics

Progression from DRL 5-6 to DRL 7 against each root condition

Predictive People Analytics performance and consistency

Reduction in data preparation requirements

Stakeholder confidence in AI enablement initiatives and generated insights
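The first of these metrics, progression against each root condition, lends itself to a simple before-and-after comparison. The sketch below is a hedged illustration only: the function `progression_summary`, the labels, and the levels are hypothetical, and the example uses four conditions rather than ten for brevity. The remaining metrics (model performance, data preparation effort, stakeholder confidence) would need their own measures and are not covered here.

```python
def progression_summary(baseline, current, target=7):
    """Compare two assessments (condition label -> DRL) taken at different times."""
    if baseline.keys() != current.keys():
        raise ValueError("baseline and current must cover the same conditions")
    improved = sorted(c for c in baseline if current[c] > baseline[c])
    regressed = sorted(c for c in baseline if current[c] < baseline[c])
    at_target = sum(1 for level in current.values() if level >= target)
    return {
        "improved": improved,
        "regressed": regressed,
        "share_at_target": at_target / len(current),
    }


# Illustrative assessments; the values are invented for the example.
baseline = {"c01": 5, "c02": 6, "c03": 6, "c04": 7}
current = {"c01": 6, "c02": 7, "c03": 6, "c04": 7}
summary = progression_summary(baseline, current)
print(summary)
```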

Multi-Stakeholder Ecosystem Benefits

The DRL framework creates ecosystem-wide alignment where:

Businesses follow validated transformation pathways and benchmark progress

Academics conduct comparable research and advance theoretical understanding

Developers build to technical specifications enabling Predictive People Analytics and AI readiness

Policy makers establish evidence-based regulatory frameworks

Vendors design products that genuinely support DRL 7 capabilities

The Standards Advantage

By functioning as a standards framework, DRL enables organisations to:

✓ Avoid trial-and-error approaches → Follow a validated transformation pathway

✓ Ensure consistency → All people data products meet the same AI-readiness standards

✓ Enable comparison → Benchmark against other organisations using a common framework

✓ Demonstrate compliance → Prove readiness using objective criteria

✓ Manage vendors → Specify requirements using standardised language

✓ Protect investment → Ensure prerequisites are met before AI deployment

✓ Engage policy makers → Contribute to evidence-based regulation

✓ Advance research → Enable academic validation and knowledge creation

✓ Guide development → Provide technical specifications for AI-ready systems